Department News

[Electronic Newspaper] Seoul National University Professor Dongjun Lee's team developed hand gesture tracking technology in var

Author

yeunsookim

Date

2021-11-02

Seoul National University Professor Dongjun Lee's team developed hand gesture tracking technology that works across various tasks and environments

<(From left to right) Seoul National University College of Engineering, Department of Mechanical Engineering: Dr. Yongseok Lee (currently Samsung Research), Researcher Wonkyung Do (currently Stanford University), Researcher Hanbyeol Yoon (currently UCLA), Researcher Jinuk Heo, Researcher WonHa Lee (currently Samsung Electronics), Professor Dongjun Lee>

Seoul National University College of Engineering (Dean Byoungho Lee) announced on the 30th that Professor Dongjun Lee's research team in the Department of Mechanical Engineering has developed a robust and accurate hand motion tracking technology, visual-inertial skeleton tracking (VIST).

The research has been recognized for showing that hands and fingers can be used in user interfaces across industries such as virtual and augmented reality, smart factories, and rehabilitation, as well as robotics. It was published on September 29 in the international journal 'Science Robotics'.

The rich and sophisticated use of hands and fingers is one of the most important human characteristics, but it is difficult to track and reproduce in real digital environments. In current robot control and virtual reality user interfaces, only movement on a tablet plane can be controlled, or an avatar is controlled with a fist clenched around a controller. These interfaces cannot take advantage of the rich three-dimensional movements of hands and fingers.

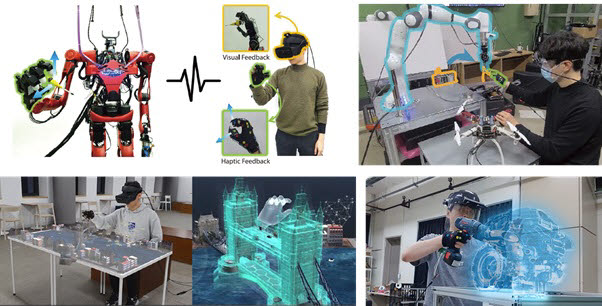

<The VIST hand motion tracking system developed by the research team and its operating concept. Image: Seoul National University College of Engineering>

The VIST technology developed by Professor Dongjun Lee's research team is the first to overcome these limitations of current technology. It fuses, in a complementary way, information from a glove carrying seven inertial sensors and 37 printable markers with information from a camera worn on the head. It can track hand and finger motions robustly and accurately even under the image occlusion that occurs frequently when manipulating objects, the geomagnetic disturbances that occur near electronic equipment and steel structures, and the contact that arises when using scissors or electric drills or when wearing tactile equipment.

In hand gestures where many fingers move quickly and in complex ways, the camera loses track of the markers, making tracking difficult. The research team improved the camera's marker tracking performance by using the inertial sensors and, at the same time, corrected the drift of the inertial sensor information by using the camera information. The core of VIST is “a technology to track multiple skeletons based on tightly coupled fusion of image and inertial sensor data” that achieves both robustness and accuracy in hand motion tracking.
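The complementary idea described above, where inertial data carries the estimate through moments when the camera cannot see a marker and camera data removes the accumulated inertial drift, can be illustrated with a very small sketch. This is not the VIST algorithm: VIST tightly couples the sensors and tracks multiple skeleton segments, whereas the example below is a plain one-dimensional Kalman filter. The function name `simulate`, the motion model, the noise levels, the accelerometer bias, and the occlusion window are all hypothetical values chosen only for illustration.

```python
import math
import random


def simulate(steps=2000, dt=0.01, accel_bias=0.05, seed=0):
    """Return (imu_only_error, fused_error): final absolute position errors in metres.

    Hypothetical 1D setup: one marker oscillating along a single axis,
    a biased and noisy accelerometer, and a camera that is occluded for a while.
    """
    rng = random.Random(seed)
    omega = 2.0 * math.pi  # 1 Hz oscillation (assumed motion)
    amp = 0.05             # 5 cm amplitude (assumed)

    def true_pos(t):
        return amp * math.sin(omega * t)

    def true_acc(t):
        return -amp * omega ** 2 * math.sin(omega * t)

    # Inertial-only dead reckoning: position and velocity.
    dr_pos, dr_vel = 0.0, amp * omega
    # Fused estimate: state [pos, vel] with symmetric covariance [[p00, p01], [p01, p11]].
    pos, vel = 0.0, amp * omega
    p00, p01, p11 = 1e-4, 0.0, 1e-4
    q = 0.5 ** 2    # assumed accelerometer variance (inflated to absorb the bias)
    r = 0.002 ** 2  # assumed camera variance (2 mm standard deviation)

    for k in range(steps):
        t = k * dt
        acc = true_acc(t) + accel_bias + rng.gauss(0.0, 0.1)

        # Dead reckoning integrates the biased acceleration, so its error keeps growing.
        dr_pos += dr_vel * dt + 0.5 * acc * dt * dt
        dr_vel += acc * dt

        # Prediction step of the filter, driven by the same inertial measurement.
        pos += vel * dt + 0.5 * acc * dt * dt
        vel += acc * dt
        p00 = p00 + 2.0 * dt * p01 + dt * dt * p11 + q * (0.5 * dt * dt) ** 2
        p01 = p01 + dt * p11 + q * (0.5 * dt * dt) * dt
        p11 = p11 + q * dt * dt

        # Camera runs at a lower rate and sees nothing between 5 s and 7 s (assumed occlusion).
        t_next = (k + 1) * dt
        visible = (k % 3 == 0) and not (5.0 <= t_next < 7.0)
        if visible:
            z = true_pos(t_next) + rng.gauss(0.0, 0.002)
            s = p00 + r
            k0, k1 = p00 / s, p01 / s
            innovation = z - pos
            pos += k0 * innovation
            vel += k1 * innovation
            # Covariance update P = (I - K H) P with H = [1, 0].
            p11 = p11 - k1 * p01
            p01 = (1.0 - k0) * p01
            p00 = (1.0 - k0) * p00

    t_end = steps * dt
    return abs(dr_pos - true_pos(t_end)), abs(pos - true_pos(t_end))


if __name__ == "__main__":
    imu_err, fused_err = simulate()
    print(f"inertial only: {imu_err:.3f} m, fused: {fused_err:.4f} m")
```

In this toy setting the inertial-only estimate drifts steadily because of the accelerometer bias, while the fused estimate returns to the true position once the marker becomes visible again after the occlusion.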

<Examples of applications of VIST hand motion tracking technology: remote control, collaborative robots, swarm control, virtual/augmented reality>

Professor Lee said, “VIST hand motion tracking technology will enable intuitive and efficient control of robotic hands, cooperative robots, and swarm robots using hands and fingers, and at the same time enable natural interactions in virtual reality, augmented reality, and the metaverse.” He added, “Compared to existing products, it has great potential for commercialization thanks to its lighter weight (55 g), lower price (material cost of $100), high accuracy (tracking error of about 1 cm), and durability (washable).”

This study was carried out with support from the Ministry of Science and ICT's Basic Research Project (middle-level research) and the National Research Foundation's Leading Research Center.

Shortcut to article: https://www.etnews.com/20210930000170

Paper Information: Y. Lee, W. Do, H. Yoon, J. Heo, W. Lee, and D. J. Lee, "Visual-Inertial Hand Motion Tracking with Robustness against Occlusion, Interference, and Contact," Science Robotics, 6(58), 2021